Safety management

What is Safety?

While conducting their business, organizations have a moral and ethical responsibility to make a positive contribution to society. Safety is one important aspect of this responsibility.

There are many definitions of Safety. A widely accepted one is the freedom from the occurrence or risk of injury to, danger to, or loss of human life. Leading organizations also cover the mental, social, emotional, and spiritual risks that humans face under a Health and/or Well-being portfolio. Safety concerns apply equally to the risks faced by physical assets such as property, structures, tools, equipment, and vehicles.

There are also differing views about Safety as an entity. Some believe Safety is created like a product or service. Others think that Safety is more like air - omnipresent and subject to contamination if not carefully managed. A new view sees Safety as an emergent property of a complex adaptive system.

Evolution of Safety Thinking

Thinking about Safety has evolved over time. The evolution has paralleled how business and industry leaders thought about maximizing the value of the human workforce.

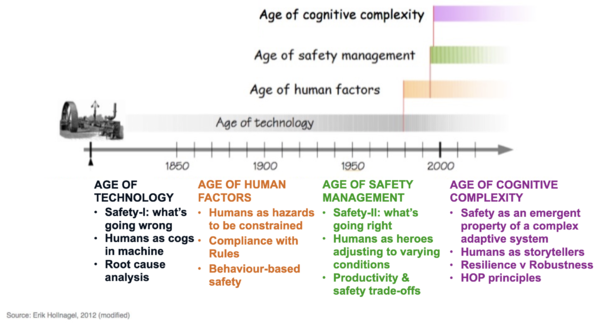

The graphic is a modified version of one developed by Erik Hollnagel and shown in his 2014 book Safety-I and Safety-II. In 1998 Andrew Hale and Jan Hovden described the 3 ages in the development of safety thinking: Technology, Human Factors, and Safety Management. This evolutionary path could now be extended with a fourth age (Cognitive Complexity).

Age of Technology

Classical Management theory has dominated business operations for well over 100 years. When Bureaucracy, Scientific Management (Taylorism), and Fayolism were introduced, they were openly accepted as a “new view”. Business owners and their subordinates were entrained to think mechanistically and thus treat humans as mere cogs of a machine. People were seen as error-prone and machines had to be guarded against workers to keep production lines running. The focus was on avoiding what goes wrong and keeping the number of adverse outcomes as low as possible. Lost-time frequency and severity became the statistical measures for Safety. Hollnagel labelled this rather negative view of safety Safety-I.

While these business concepts have their drawbacks and critics, they did fill a huge need at the time: organizing people to get work done productively.

Age of Human Factors

Productivity soared but eventually peaked due to human limitations and imperfections ("Dirty Dozen"). The age of Human Factors marked the arrival of Systems Thinking with Peter Senge's seminal book The Fifth Discipline. Humans were treated slightly more favourably, no longer as cogs but as hazards who could be controlled with rules, inspections, and audits. Two nuclear power plant accidents (Three Mile Island and Chernobyl) placed more attention on the fallibility of humans. From the reports a new term was spawned: 'Safety Culture'.

Age of Safety Management

This is the era when businesses engaged systems thinking from an engineering perspective. Business Process Re-engineering (BPR) popularized the mantra faster, better, cheaper and applied linear reductionism to break down a system into parts: people, process, and technology. Hollnagel questioned why so much time and money was being spent on accident investigations. To be more proactive, he introduced the concept of Safety-II and learning from what is going right, which happens most of the time. Acknowledgement was given that workers cannot control everything and often a workaround is required to get the job done. So the idea of 'Performance Variability' was born; a human is not a hazard but a hero who could vary performance in order to adjust to changing conditions in the work environment.

Age of Cognitive Complexity

The latest age once again shifts the thinking on safety as new research findings in cognitive and complexity sciences are applied to make sense of safety. All organizations are viewed as complex adaptive systems (CAS). Safety is defined as an emergent property of a CAS. Humans do not create Safety; they create the conditions that enable safety to emerge. For example, safety rules are useful because they create the conditions for safety to emerge. However, if more and more rules are added on, a worker goes into cognitive overload. What emerges is Danger in the form of pressure, frustration, and distraction. And if a complexity phenomenon called a tipping point is reached, an unintended negative consequence (called "Failure") could unexpectedly happen.

Another insight from cognitive science destroys the myth that a human brain functions as a computer, a logical information-processing machine. The brain's strength is not storing and retrieving information but recognizing patterns. Humans are homo narrans, i.e., natural storytellers. When telling safety stories or narratives about past events, a worker will share feelings and emotions that direct questions would not reveal. Stories are also better at describing complex situations since they can provide context. In essence, stories and their underlying patterns generate a picture of the organization's safety culture.

Safety-I serves users well in maintaining the number of accidents at an acceptable level. The key to success is identifying known risks. However, Erik Hollnagel points out that this is also Safety-I’s weakness: “We can guard against everything that we can imagine. But we cannot imagine everything. Therefore, we cannot guard against everything.” Safety-II relies on human judgment and experience to adjust behavior when explicit risk procedures are absent, when rules get in the way, or when unexpected changes in working conditions signal emerging danger.

The current dominant paradigm remains Safety-I. Most Safety Regulators carry out their duties through a Safety-I lens. This explains why accident investigations search for human error and end up attaching blame to workers. To look good in the eyes of the Safety Regulator (and avoid punishment), management will pile new or revised safety rules onto the backs of fatigued workers and add to the compliance inspection checklists of overburdened supervisors.

Anthro-complexity approach to Safety

Each era has ushered in a “new view of safety”. When the method of statistical process control was launched in the early 1920s, failures became a number to measure. In 1931 Herbert Heinrich parlayed numbers into a “scientific approach” in his book Industrial Accident Prevention. When he introduced his Domino Theory to explain accident causation, it would have been considered a “new view” for that era.

In the 21st century, “new safety” has become an endeavour to shift from the safety paradigms that have dominated for the past century and see people in a different light - as solutions, not problems.

The anthro-complexity approach for “new safety” is a view that blends complexity science, cognitive science, and the social science of anthropology - the field of ethnography. Safety is not a product to be created nor a service to be delivered. Safety is the emergent property of a complex adaptive system (CAS). The aim is to adapt system constraints and work conditions to enable safety to emerge from the relationships and interactions among people, machines, events (e.g., COVID-19), and ideas (e.g., Zero Harm). Humans are treated as storytellers.

Perceiving Safety as an emergent property of a complex adaptive system is not a new idea. Richard Cook proposed it in 1998 in his paper How Complex Systems Fail. Other safety academics and professionals advocating this paradigm are listed in a safetydifferently.com article.

Safety and the 3 Basic Systems of the Real World

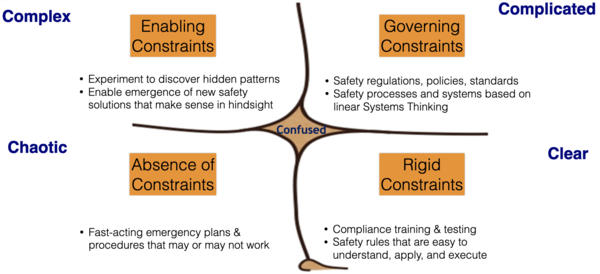

Cynefin allows us to distinguish between three different kinds of systems.

- The Ordered system is governed and constrained in such a way that cause and effect relationships are either clear or discoverable through analysis. Safety-I is in the Order system. It’s where safety regulations, policies, standards, rules, compliance checking, auditing, best practices reside. Safety-I is about strengthening Robustness, the capacity to take an unforeseen hit and survive without changing.

- The Chaotic system has no effective constraints; turbulence prevails and immediate stabilizing action is required. When an accidental failure such as a machine/tool/equipment breakdown or personal injury occurs, this is typically characterized by a plunge from the Order into the Chaotic system. Failures are always a surprise; something unexpectedly happened to cause the system to physically change. Minor failures are slips, trips, and finger cuts, while at the other end of the spectrum are catastrophes, disasters, and fatalities. Major incidents in popular vernacular are called Black Swans and Black Elephants. The latter is a major event that is known to be coming; what is unknown is when it will happen or how big it will be.

- The Complex system is where causal relationships are entangled and dynamic and the only way to understand the system is to interact with it. Complex systems in nature such as a tornado, wildfire, or lightning storm eventually dissipate their energy and extinguish. Living organisms survive by mutation or adaptation. Safety-II is the ability to succeed under varying conditions. Safety-II is about building Resilience. It means making performance adjustments to match current conditions, thus enabling safety to emerge, while staying alert in case danger inadvertently emerges.

Safety Context in Cynefin

An excessive focus on the avoidance of accidents has resulted in the strong belief that safety is an ordered system. Company safety professionals as experts residing in the Cynefin Complicated domain interpret safety acts, laws and regulations passed down by the industry safety regulators. They then turn them into governing constraints: policies, standards, systems, rules, and procedures. Rigid ones, like Golden Safety Rules, are placed into the Clear domain and join highly constrained procedures called Best Practices.

Analytical methods such as Root Cause Analysis work well in the mechanistic Order system (Cynefin Complicated and Clear domains). Reductionism looks "down" at a system and breaks it into its components (e.g., people, process, technology). Each part can be analyzed, fixed, repaired, improved independently.

Sense-making in the Cynefin Complex domain looks "up" at the system as a whole to see narrative patterns that emerge due to system constraints. When analyzing Safety-I + Safety-II type stories as a collection, the brain is able to recognize patterns of behaviour that might explain why people behave the way they do. Workarounds, rule-bending, or even rule-breaking to get the job done, are “weak signals” that one or more Clear domain safety rules are insufficient to deal with variability and diversity of daily work. Other humanistic patterns may include team rituals, crew taboos, unwritten rules, sacred cows, and tribal codes.

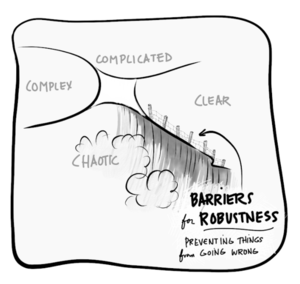

Safety Strategy = Strengthen Robustness + Build Resilience

Robustness is the ability of a system to resist change. It is a highly desirable property of the Order system. In safety, a fall into the Chaotic domain typically relates to human accidents and to failures of machines, equipment, tools, vehicles, and facilities. Both minor scrapes and disasters show up as surprises and people are always caught off guard.

Safety-I is associated with Robustness. Examples of safety barriers in the Clear domain would be personal protective equipment, safety rules, grounding apparatus, hazard signs, and mechanical warning devices. Strengthening system robustness involves hardening assets like buildings & facilities, deploying stronger materials, trucks, and tools that can take a beating and keep on performing. When dealing with Known Unknowns in the Cynefin Complicated domain, experts identify the risks, assess the probabilities of failure, estimate consequences in terms of frequency and severity, and develop causal mitigation plans.

Barriers for robustness are typically rigid and fixed. This means when they break down or fail, the results are usually disastrous. Just think of a dam holding back water to create a reservoir. If a breach in the wall is not immediately repaired, the dam will eventually collapse, sending tons of water rushing down into the valley below. This is why strengthening robustness is not enough. A safety strategy must also build resilience.

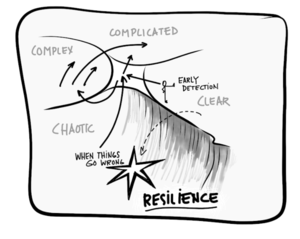

Safety-II is associated with Resilience. In the anthro-complexity approach, building resilience focuses on three capabilities:

Fast recovery

This is the most common definition of resilience: bouncing back to the original operating point. It is a reactive response after a failure has occurred. The decision-making method in the Chaotic domain is Act-Sense-Respond. Act quickly to stabilize the bad situation; sense what options are available; respond by taking action. For example, a vehicle has crashed into an electric utility pole causing a power outage. An emergency restoration crew acts quickly to eliminate dangers such as live wires or a car fire and to erect public barricades. The situation is now stabilized and moves from the Chaotic to the Confused domain. Why confused? Because the cause of the accident may still be uncertain. However, in fast recovery, the objective is to get the lights back on, so the decision to return to the Clear domain is made.

The downside is that if system constraints and patterns are not changed, there is a risk of a repeat failure in the future, a concern called practical drift, normalization of deviance, or drifting into failure.

Speedy exploitation of an emerging opportunity

After stabilizing a failure, other available options include analyzing with causal experts in the Complicated domain or probing to understand in the Complex domain. Serendipity is the emergence of a positive opportunity from a sudden failure. To illustrate using the vehicle accident, utility engineers seize the chance to relocate the pole to eliminate future collisions. Past efforts were fruitless as customers fervently objected for aesthetic reasons. However, when people have recently experienced the agony of a power outage, resistance disappears and the pole is relocated with the customers' blessing. An adjacent possible, a stepping stone from the current state of disposition, emerged and was exploited.

Early detection to avoid a plunge into the Chaotic domain

Proactive resilience is detecting weak signals in the Order system that indicate the nearness of a dangerous tipping point. The early detection pathway runs through the Confused domain to the Complex domain, where the aim is to make sense of the system as a whole, work with the present state, and use small actions to create conditions for significant improvements. Changes are then documented in the Complicated domain and training is provided in the Clear domain.